Does x86 Need to Die?

A recent article claimed that "x86 needs to die". Does it? I was on ThePrimeagen's live stream last week to talk about it.

As many of you already know, I am a big fan of ThePrimeagen’s Twitch channel. I’ve been on it a few times now, and at least from my point of view as a guest, it consistently has the best energy of any show I’ve done.

Last week, I joined Prime to discuss a recent article called “Why x86 Needs to Die”. You can watch a (very lightly) edited VOD of the complete stream here:

Prime’s show is intended for a general programming audience, and as such, we didn’t go into too much detail. But I feel like we did a good job covering the basics, and explaining why the article was — at best — misleading.

As I say at the end of the stream, I do think there are things about x64[1] that can be improved. But the article seems to be suggesting that the bad parts of x64 are caused by things that have little or nothing to do with the actual problems facing today’s x64 chip architects as I understand them.

Along those lines, folks who subscribe to my performance-aware programming course know a lot about microarchitecture analysis already, and may want to go a bit deeper on this topic. For those interested, I’ve written up some additional context below.

Memory Addressing

At the beginning of the stream, I object to the suggestion that the CPU’s ability to “address memory directly” (the author’s words, not mine) in Real Mode is somehow creating a lot of legacy maintenance for modern x64. Immediately after, I make an off-hand comment that if you were going to complain about maintaining something from the x86 memory model, you’d complain about segmentation (or something to that effect).

If you’re wondering what I was talking about: the main 8086 Real Mode “backwards compatibility” features that create potential problems for x64 have little to do with direct memory access. To the extent they involve memory access at all, it’s because the address calculation model was different: addresses were formed from 16-bit segment registers combined with 16-bit offsets (with 16-bit wrapping). Removing the requirement to maintain these registers and correctly compute addresses with them is a potential simplification, and that’s what I was referring to.
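For readers who haven’t seen it, here is a minimal sketch of that Real Mode address calculation in C. It assumes the textbook 8086 model (physical address = segment * 16 + offset, with offsets wrapping at 16 bits and the sum wrapping at 1 MiB on an original 8086). The function names are hypothetical, and the sketch ignores everything else a real CPU has to handle, such as how later machines configure the A20 gate.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical helper: forms a Real Mode physical address.
 * The 16-bit segment is shifted left by 4 (multiplied by 16) and added to the
 * 16-bit offset, producing up to 21 bits. An original 8086 had only 20 address
 * lines, so the sum wrapped at 1 MiB; wrap_at_1mib models that behavior. */
uint32_t real_mode_address(uint16_t segment, uint16_t offset, bool wrap_at_1mib)
{
    uint32_t addr = ((uint32_t)segment << 4) + (uint32_t)offset;
    if (wrap_at_1mib) {
        addr &= 0xFFFFFu; /* keep only the low 20 bits */
    }
    return addr;
}

/* Hypothetical helper: offset arithmetic inside a segment is 16-bit, so
 * base 0xFFFF plus displacement 2 wraps around to offset 0x0001. */
uint16_t real_mode_offset(uint16_t base, uint16_t displacement)
{
    return (uint16_t)(base + displacement);
}
```

For example, segment 0x1234 with offset 0x0010 yields 0x12350, and so does segment 0x1235 with offset 0x0000. Many segment:offset pairs alias the same byte, which is part of what makes this model awkward to keep carrying forward.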

But you don’t have to take my word for it. Intel has already told us what it would like to simplify in x64 by removing 16- and 32-bit compatibility. We don’t have to guess!

If you read Envisioning A Simplified Intel Architecture, and the companion architecture proposal, you can see precisely what Intel thinks they would benefit from removing. The older style of segmented addressing is one of the things they listed. Others are things like unused privilege ring levels and so on. From the summary:

So that’s what I was talking about. And of course, to my knowledge, removing the ability to “address memory directly” appears nowhere in the document.

If you read this Intel proposal (which I did, back around when it was originally posted), hopefully you can see why the “Why x86 Needs to Die” discussion of Real Mode sounds fishy. Not only has Intel already proposed a relatively straightforward path to removing it, but it’s not really a big priority for anyone with respect to CPU performance. Removing some microcode, simplifying the init sequence, etc., are all things that probably should be done, but nobody expects this to make x64 CPUs perform significantly faster than they already do on most workloads[2].

And more importantly, as I just linked to, there is already a proposal to cleanly remove them from x64 — without x86 “dying” and being replaced by ARM and RISC-V as the article suggests.

Shifts Affecting Flags

At another point in the video, I talk about how there are issues with x64 instruction encoding that make it less efficient to decode large numbers of instructions at the same time. I talk about this being the main thing that you would want to fix about x64: rearranging some of the bit patterns to make it simpler to decode a large number of sequential instructions in parallel.
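To see why variable-length encoding gets in the way of wide decode, here is a toy sketch in C. It assumes a made-up encoding (not x64’s) in which the low two bits of an instruction’s first byte give its length. The point is only that finding where instruction i begins requires decoding the length of every instruction before it, whereas with a fixed 4-byte encoding each decoder slot can compute its start independently.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Fixed-width (4-byte) encoding: the start of instruction i is known
 * without looking at any other instruction. */
size_t fixed_start(size_t i)
{
    return 4 * i;
}

/* Hypothetical variable-length encoding: the low two bits of the first byte
 * give the length, from 1 to 4 bytes. */
size_t toy_length(uint8_t first_byte)
{
    return (size_t)(first_byte & 3u) + 1;
}

/* Finding instruction i means walking instructions 0..i-1 first:
 * each step depends on the previous one (a serial dependency chain). */
size_t variable_start(const uint8_t *code, size_t i)
{
    size_t offset = 0;
    for (size_t k = 0; k < i; ++k) {
        offset += toy_length(code[offset]);
    }
    return offset;
}
```

Real x64 front ends spend extra logic (and power) breaking this serial chain, which is why rearranging the bit patterns so that instruction boundaries are easier to find is the kind of fix described above.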

But when I say that, I also say there are a handful of other things you might fix, like “shifts affecting flags”. All I meant to do here was suggest an example of a simplification you could do to x64 that would remove some unnecessary complexity on the hardware (and software) side.

To be specific, as many of my viewers already know from Part 1 of the course, conditional instructions are often based on a “flags” model. Instructions set flags indicating the results of their operations, like ZF (the result was zero) or CF (the operation carried out of the top bit, i.e. unsigned overflow). Later instructions can take action based on these flags, like conditionally jumping to another part of the code.
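As a refresher, here is a toy C model of the flags behavior of a 32-bit ADD. It tracks only ZF and CF (a real x64 ADD also writes OF, SF, AF, and PF), and the names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

typedef struct {
    bool zf; /* zero flag: the result was zero */
    bool cf; /* carry flag: the unsigned sum carried out of bit 31 */
} Flags;

/* 32-bit ADD: returns the wrapped sum and sets ZF and CF as a side effect. */
uint32_t add32(uint32_t a, uint32_t b, Flags *f)
{
    uint32_t r = a + b; /* wraps modulo 2^32 */
    f->zf = (r == 0u);
    f->cf = (r < a);    /* a carry happened iff the wrapped sum is below an operand */
    return r;
}

/* A later conditional jump such as JZ consumes only the flag, not the result. */
bool jz_taken(const Flags *f)
{
    return f->zf;
}
```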

This design creates a lot of “unnecessary dependencies”, in that many instructions you might do (such as shifting a value) are defined to modify the flags whether you intended to use the resulting flag value or not. So not only does the CPU have to track the changes to the flags across operations even though you may not have cared about those changes, you (or more likely, the compiler) also have to be careful not to use an instruction that overwrites the flags in between a flag change you did care about and the subsequent conditional instruction that depends on it.
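The hazard can be sketched with the same kind of toy model: a CMP, then a SHL, then a JZ. This is a hypothetical C model, not real x64 code. It follows the x64 rules that a 32-bit shift count is masked to 5 bits and that a zero count leaves the flags alone, but it models only ZF and CF.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

typedef struct {
    bool zf; /* zero flag */
    bool cf; /* carry flag */
} Flags;

/* CMP a, b: computes a - b only for its effect on the flags. */
void cmp32(uint32_t a, uint32_t b, Flags *f)
{
    f->zf = ((uint32_t)(a - b) == 0u);
    f->cf = (a < b); /* borrow */
}

/* SHL x, count on a 32-bit operand. A nonzero count overwrites ZF and CF
 * whether or not the program wanted them. */
uint32_t shl32(uint32_t x, unsigned count, Flags *f)
{
    count &= 31u;
    if (count == 0u) {
        return x; /* zero count: flags unchanged */
    }
    uint32_t r = x << count;
    f->cf = ((x >> (32u - count)) & 1u) != 0u; /* last bit shifted out */
    f->zf = (r == 0u);
    return r;
}

/* "cmp a, b ; shl x, n ; jz": the JZ sees the SHL's ZF, not the CMP's. */
bool jz_after_cmp_then_shl(uint32_t a, uint32_t b, uint32_t *x, unsigned n)
{
    Flags f = { false, false };
    cmp32(a, b, &f);
    *x = shl32(*x, n, &f);
    return f.zf; /* what JZ would branch on */
}
```

With a = b = 5 and x = 1, the branch is not taken even though the values are equal, because the shift produced a nonzero result. This is why the compiler has to schedule the shift before the CMP, or otherwise keep flag-writing instructions out of that window.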

So, one potential modification to the x64 architecture that would eliminate this unnecessary complexity is to provide instructions for things like shifts that don’t affect the flags. That way, when a programmer doesn’t intend to modify the flags, they use the variant of shift that leaves the flags alone.
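A flag-free shift variant removes the hazard. In this hypothetical C sketch, the shl32_nf name only echoes the “no flags” idea (it is not a real intrinsic or mnemonic); the shift takes no flags argument at all, so the JZ still sees the CMP’s result.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

typedef struct {
    bool zf; /* only the zero flag is modeled */
} Flags;

/* CMP a, b: sets ZF if a - b == 0. */
void cmp32(uint32_t a, uint32_t b, Flags *f)
{
    f->zf = ((uint32_t)(a - b) == 0u);
}

/* Hypothetical flag-preserving shift: it neither reads nor writes the flags,
 * so there is no dependency on them to track. */
uint32_t shl32_nf(uint32_t x, unsigned count)
{
    return x << (count & 31u); /* count masked to 5 bits, as for 32-bit SHL */
}

/* "cmp a, b ; shl_nf x, n ; jz": JZ now tests the comparison it was meant to. */
bool equal_after_nf_shift(uint32_t a, uint32_t b, uint32_t *x, unsigned n)
{
    Flags f = { false };
    cmp32(a, b, &f);
    *x = shl32_nf(*x, n);
    return f.zf;
}
```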

Once again, though, you don’t have to take my word for this! As before, Intel already proposed an instruction set extension which fixes this, among other things. These changes to x64 are called the Advanced Performance Extensions, and they already have a complete architecture specification. It includes the ability to suppress flag writes for many common instructions, as well as a host of other performance-targeted features, many of which have far more potential to improve performance than flag suppression.

So, Does x86 Need to Die?

For the most part, I don’t really care about the answer to this question. I wanted to join Prime in “reacting” to the original article primarily because I thought it misrepresented a lot of aspects of modern CPU architecture, at least insofar as we software developers understand them. I also wanted to push back on the suggestion that “CISC vs. RISC” was a useful analysis framework (it’s not, as Chips and Cheese explained very well in their own response to the original article).

What I do care about is being clearer about how modern microarchitectures work, and making sure people understand that although there are issues with x64 (as there are with any instruction set), they have surprisingly little to do with a “RISC good, CISC bad” — or even a “legacy vs. modern” — dichotomy.

That said, on balance, I disagree that x86 “needs to die”. I say that because x86 (now x64) has done a remarkable job stabilizing software development over the past forty years. Sure, you can call that “legacy” or a “maintenance problem” if you want to look at the downsides. But you could also call it a remarkably flexible instruction set if you’d like to look at the upsides.

If, for example, we were to turn the “crapping on someone else’s ISA” hose around, and point it at RISC-V, we certainly could. Even though it is essentially brand new, we can already see it failing to do something x86 has done trivially many times.

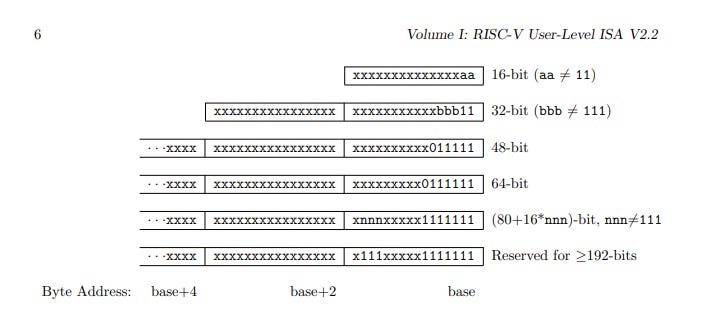

In their recent RISC-V V extension — a crucial extension that finally adds vector support to the ISA so that it can try to be competitive in computationally intensive workloads — they already ended up with a fairly bad instruction set in version 1. This was at least in part because they decided not to use variable-length encodings for the instructions.

As a result, despite all modern SIMD/vector instruction sets (AVX-512, ARM SVE, your favorite GPU’s custom ISA, etc.) having the ability to mask instructions against your choice of mask register, RISC-V V only allows masking to be toggled on or off. When on, the mask is always read from v0, and no other masks are available.

Why? Because they couldn’t find enough bits in their 32-bit instruction encoding to reference any more than that! So instructions are either masked or not, based on the single bit they had room to encode. By contrast, when AVX-512 introduced masking into the x64 instruction set, it used a 3-bit field to select among 8 mask registers (k0 through k7, with k0 meaning no masking). As usual, x64 architects just ate the cost of having to support more elaborate instruction decoding.
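The difference is easy to see at the bit level. The following C sketch assumes the RVV OP-V arithmetic layout (funct6, vm, vs2, vs1, funct3, vd, opcode, which together use all 32 bits) and the standard EVEX layout, in which the low three bits of the fourth prefix byte form the aaa mask-register field. The helper names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* RVV OP-V layout: funct6[31:26] vm[25] vs2[24:20] vs1[19:15]
 * funct3[14:12] vd[11:7] opcode[6:0]. Every bit is already spoken for. */
_Static_assert(6 + 1 + 5 + 5 + 3 + 5 + 7 == 32, "OP-V fields fill the 32-bit word");

/* Hypothetical encoder for an OP-V instruction. */
uint32_t rvv_encode_opv(uint32_t funct6, bool masked, uint32_t vs2, uint32_t vs1,
                        uint32_t funct3, uint32_t vd)
{
    uint32_t vm = masked ? 0u : 1u; /* vm = 0 means "masked by v0.t" */
    return ((funct6 & 0x3Fu) << 26) | (vm << 25) | ((vs2 & 0x1Fu) << 20) |
           ((vs1 & 0x1Fu) << 15) | ((funct3 & 0x7u) << 12) | ((vd & 0x1Fu) << 7) |
           0x57u; /* OP-V major opcode, 1010111 */
}

/* RVV can only say "mask with v0" or "no mask": returns 0 for v0, -1 for none. */
int rvv_mask_register(uint32_t insn)
{
    return ((insn >> 25) & 1u) ? -1 : 0;
}

/* EVEX is 0x62 followed by P0, P1, P2. The low three bits of P2 ("aaa") pick
 * k1..k7, and aaa = 0 (k0) means "no masking". Returns -1 for none. */
int evex_mask_register(const uint8_t evex[4])
{
    int aaa = evex[3] & 0x7;
    return aaa == 0 ? -1 : aaa;
}
```

Choosing among eight mask registers would need three bits where RVV has one, and there is no spare field in the 32-bit word to take them from.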

So rather than thinking of it as “fixed-size instructions good, variable-length instructions bad”, I would encourage people to think of this as what it is: a tradeoff. There is a cost to maintaining a compact, consistent instruction encoding. It makes it significantly harder to respond to changes in the industry and the computing landscape. It makes it harder for IHVs to provide their customers with timely new instructions that properly reflect the needs of emerging high-performance software domains. Either you sacrifice instruction quality (like RISC-V V did), or you go the same route as x86/x64 and require more complex decoder logic.

I strongly suspect an eventual RISC-V V revision will do precisely this, in fact! RISC-V does support variable-length encoding, and I wouldn’t be surprised if, once it has been around for even a fraction of the lifespan of x86, it needs to make heavier use of its variable-length encoding scheme to become performance-competitive.
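RISC-V’s variable-length scheme is at least cheap to parse, since the length is determined from the low bits of the first 16-bit parcel. Here is a minimal C sketch, assuming the length-encoding table from the RISC-V unprivileged specification and handling only the 16-, 32-, 48- and 64-bit cases; the function name is hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Returns the instruction length in bytes, judged from the first 16-bit
 * parcel alone, or 0 for the longer/reserved encodings not handled here. */
unsigned riscv_insn_length(uint16_t parcel)
{
    if ((parcel & 0x03u) != 0x03u) return 2; /* bits[1:0] != 11: compressed */
    if ((parcel & 0x1Cu) != 0x1Cu) return 4; /* bits[4:2] != 111: standard 32-bit */
    if ((parcel & 0x3Fu) == 0x1Fu) return 6; /* bits[5:0] == 011111: 48-bit */
    if ((parcel & 0x7Fu) == 0x3Fu) return 8; /* bits[6:0] == 0111111: 64-bit */
    return 0;
}
```

Compare that with x64, where an instruction’s length can depend on its prefixes, opcode, ModRM, SIB, displacement, and immediate bytes.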

As I say in the video, none of this is to suggest that x64 is some kind of optimal instruction encoding. Far from it. Anything that has to evolve slowly over many years is destined to have concessions to that evolutionary process, and as the APX specification itself demonstrates, x64 architects themselves are well aware of that.

But I do think the original article was way off base. ARM and RISC-V aren’t particularly special. They’re not dramatically better than x64, and in some ways, you could argue that they are worse (especially RISC-V, despite having had the benefit of both x64 and ARM going before it). There are plenty of things that could be improved in x64, but new extensions that do just that arrive regularly. Similarly, there are a lot of ways that new instruction sets like RISC-V could lose their simplicity over time if they need to make changes to become competitive in higher performance classes.

Furthermore, if x86/64 does end up dying out, I don’t think it will have much to do with its design. If x64 processors do get phased out over the next few decades, the much more likely reason is licensing.

ARM can be licensed by anyone, and RISC-V is effectively free. Those are compelling business reasons to choose either over x64, and they have already led to many more IHVs providing ARM and RISC-V processors than currently provide x64 processors.

So do I think x64 “needs to die”? Definitely not. But more importantly, if it does, I don’t think it will be because the ISA couldn’t evolve. I strongly suspect x64’s eventual demise will be for business reasons, not technical ones.

If you like the sort of architectural explanations I provided on the stream with Prime, that’s precisely the sort of thing I do here every week! You can subscribe to the free version of this site, and also see paid subscription options.

[1] Confusingly, the original article is titled “Why x86 Needs to Die”, but it is specifically about why x64 needs to die and be replaced by ARM and RISC-V. This makes it a little hard to refer to the article while also being precise about what I’m referring to in this article. To avoid confusion, I’m going to use x64 everywhere, since that’s generally what we’re talking about, and also what the original article was talking about, even though it keeps referring to modern x64 as “x86”.

[2] Without getting too far into the weeds, the security concerns mentioned in the original article also seem pretty far off base. The author claims that things like “speculative execution” are a problem for x64, as if that has something to do with the legacy of the x86 instruction set. But all high-performance CPUs now use speculative execution, including the high-performance ARM core you probably have in your mobile phone.

Rather, the kinds of security concerns that one could imagine alleviating would be things like simplifying the APIC by removing legacy options. This might decrease the likelihood of APIC-related security vulnerabilities (of which there have been several), since there’d be less total surface area to think about. Again, precisely such a simplification is already contemplated by the “Envisioning a Simplified Intel Architecture” proposal.
