In each Q&A video, I answer questions from the comments on the previous Q&A video, which can be from any part of the course.

Questions addressed in this video:

[00:02] “why for the 128 bit and 256 bit tests you made the dummy reads on separate registers instead of the same register. like you did vmovdqu xmm0 and then xmm1? is there additional optimization applied when doing SIMD on the backend side?”

[01:55] “Hi, some adhere to the dogma that "stack allocation is more performant than heap allocation" and thus minimize their use of heap allocation. My understanding of memory is that stack allocation involves merely pointer manipulation, while heap allocation requires system calls. However, memory is memory, regardless of whether it's on the stack or the heap. In cases where the needed memory is allocated only once at the start of a program, should we still care about stack or heap? Do you know of any performance-related reasoning that could justify preferring stack allocation instead of heap allocation? For instance, is the decoding of instructions manipulating the stack (such as push and pop) faster than that of mov instructions?”

[10:21] “With this approach for bandwidth testing, can we do something to measure NOT power-of-two working set sizes? Otherwise, this leaves us with only about ~34 datapoints, if we go up to 16GiB. My understanding is that the power-of-two requierment comes from wanting a fast modulo, but how slow is division by a non-pow2 a constant in practice? Maybe we can make it fast enough so that the loads are still firing at full speed?”

[13:33] “- I can imagine the whole "downconverting" danger you were mentioning would actually happen by using B32 if you used it as a bitfield in a struct.

- Because "true" is represented with lots of different bit patterns, if you wanted to compare two B32s, writing it like a == b won't get the logical semantics one might hope for - you have to remember to do some extra typing. This also applies when memory-comparing two instances of a struct, as they may be semantically equivalent but not bit-for-bit-matching, as well as when hashing a struct, for the same reason.

Do you find yourself just not caring about these cases very much?”

[20:32] “Hi, I have a question regarding "Cache size and bandwidth testing" homework. On my CPUs specification I can see that a single core can access 32KiB of the L1 cache, and my tests confirm this because I can load exactly 32KiB and still get good bandwidth. But when testing for L2 cache (which is 512KiB), I can't load 512KiB at same speed as 256KiB, it is getting a bandwidth that of the L3 cache.

Is this because the L1 has separate caches for data and instructions, so I can fully utilize the data part of the L1 cache? But this is not the case for L2, because instructions and data are shared on the L2 cache?”

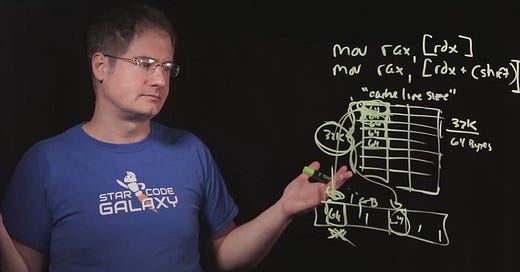

[22:59] “I tried to do the ‘challenge’ version of the bandwidth testing homework. My initial attempt didn't work but I don't understand why.

My first idea was very simple : a loop repeateadly reading two different adresses that are separated by a certain fixed amount, but those two adresses are always the same as the loop iterates.

mov rax, [rdx]

mov rax, [rdx + <shift>]

(without changing rdx inside the loop)

My understanding was that, even if I always read the same two places, if <shift> is big enough, those two places could not BOTH be in the L1 cache, so I should see the extra cost of retrieving say the second one.

But it didn't work :) Even with a 1GB shift between the two adresses, I got the same performance as with a very small shift.

Thinking about it made me realize that I don't exactly understand how cache operates. Imagine my first address is actually in L1 and my second is in say main memory. Will the chip read that second one from the memory (and pay the bandwidth cost) ? Or load that corresponding memory region into L1 ? (so it can be access faster now, and later if needed)

I guess chips have some kind of policy to decide this ‘read from distant memory’ vs ‘bring that distant memory segment closer to the chip’”

[34:06] “Why doing 3 loads in an iteration can be slightly faster than 2 loads on a CPU with 2 load units?”

[35:28] “I had an interesting result with my cache sizing and bandwidth testing homework under Linux on a Zen4 chip. My initial implementation mapped 1GB of memory for the tests and then ran the tests immediately reading from that block. When it reached the sizes definitely outside of the L3 cache I was still seeing results that matched what I was seeing for the L3 cache.

Noticing that Linux was reporting the memory usage as very low while the test was running I added some code to write a byte into each page of the 1GB block of memory before running the test to fault them in, it then reported the expected memory usage of 1GB and the performance dropped off as expected after exceeding the L3 cache size.

My theory is that Linux is mapping the entire 1GB range to a single physical page which is filled with 0s and then faults on write to allocate the memory for real. Does that sound about right? If that's the case, I would have thought the behavior to be more like the L1 cache if the pages are pointing to the same physical page.”

[40:00] “do you think that since simd requires such specific data formats to be used and can be so cumbersome - would it be a win of modern vendors implemented scatter gather instructions even if that meant the rest of the simd capabilities were less powerful?”